2006 Prologue

I'm in the process of writing, or trying to write, a book on Follow Through. This process is both slow and painful: slow because I have to plow through mountains of material from the 1960s and 70s, painful because much of what I revisit opens vistas of horror that I have tried hard not to revisit. The good news is that the process has forced me to contact some people I really respect and like a lot.

Letter to Wilson


November 16, 1977

Dear Ms. Wilson:

The critical Follow Through issue is a moral one. We have demonstrated the capacity to teach you and other educators about teaching "poor kids," turning them on, and ensuring that they catch up to their middle-class peers in academic skills. Our Follow Through achievements, however, don't show what we are actually capable of doing, because we do not have fully implemented sites – only moderately implemented sites. The Follow Through guidelines have never permitted the kind of total system support needed to provide a full-fledged demonstration of what poor kids can achieve in grades K-3 if they receive a fully implemented, uniform Direct Instruction approach (with trained teachers, supervisors, and directors).

The moral issue centers on this question: What does Follow Through stand for? Is it simply an experiment on human beings which has no concern for what the experiment might reveal for the millions of other "poor kids" who have serious educational needs? Is your office even remotely justified in treating all sponsors as equals and shifting (in the past two years) the emphasis from sponsors to individual sites? Or is this move designed to detract from the issues of effective approaches and make it seem that "every approach is capable of producing good results"?

We would not have engaged in Follow Through for the past ten years if we had thought that there would be no attempt to weed out the inadequate model approaches, to educate both the public and the educational community about how to be effective, and to disseminate information on how to be an effective model. Furthermore, we would not have perpetuated our model if it had proved to be a hoax; rather, we would have quit Follow Through with abject apology if the results had shown that our approach did not work any better than that of the typical Title I program and produced kids who performed only at the 18th percentile in reading and arithmetic by the end of the third grade.

According to your letter of October 28, 1977, "No single instructional approach, including that of Direct Instruction, was found to be consistently effective in all of the projects where it was tried and evaluated. Therefore, no overall claim of its effectiveness can be supported."


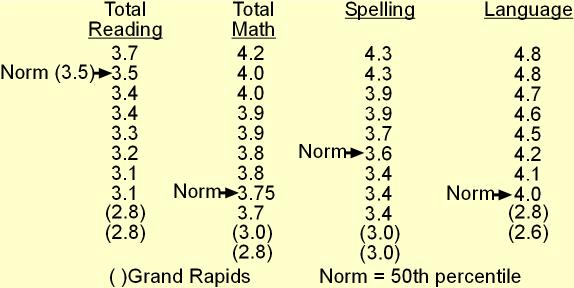

This statement flies in the face of the USOE's own Evaluation Synthesis (John Evans' office), which indicates that there is one model generally effective in basic skills, cognitive skills, and affective measures: Direct Instruction. Your statement also flies in the face of the Abt Report data. It contradicts not merely the data showing the relative superiority of Direct Instruction over comparison groups, but also the absolute grade-norm data showing Direct Instruction to be the only approach that brings third graders to or near grade level in reading, arithmetic, spelling, and language.

Your conclusion that no single instructional approach was found to be consistently effective is spurious because (1) Grand Rapids is included as a Direct Instruction site (for two data points), and (2) the conclusion confounds program-effect variability with control-group variability. Any analysis of variability in program implementation must take absolute grade-level performance into account. Grade-level performance is clearly as relevant as statistical significance, because if all kids were performing at grade level (on average), there would have been no need for Follow Through. In fact, much of the rhetoric from Robert Kennedy, Commissioner Howe, Bob Egbert, and Jack Hughes that led to Follow Through focused on the fact that poor kids perform well below grade level and are therefore preempted from "higher education" and its concomitants.

The so-called "variability" among the Direct Instruction sites is accounted for first by the unwarranted inclusion of Grand Rapids as a Direct Instruction site for two cohorts. Grand Rapids is not a Direct Instruction site. It is a Lola Davis site. You know that for most of 1972 and 1973 we did not function as a sponsor for Grand Rapids. You also know that we did not receive funds for servicing Grand Rapids after the spring of 1973, when relationships with Grand Rapids were severed. You also know that Grand Rapids was not functioning as an implemented Direct Instruction site before the 1972-73 school year. It was not implemented – not because of our lack of effort – but because the director had different ideas about what and how to teach. You were involved in this situation and know our position and the history quite well. Grand Rapids is the only Direct Instruction K-starting site that performs relatively low in absolute performance. In fact, Grand Rapids performs an average of ½ standard deviation below the mean of the other Direct Instruction K-starting sites. Examine the following summary tables, based on data from Abt 3 and Abt 4, which show median grade norms for Direct Instruction sites.


Although there is variability, note that most of it lies above the national norm once Grand Rapids is removed. Grand Rapids is the only site that consistently falls below the national norm. Even the most casual inspection of these data suggests that Grand Rapids is "different." And you know that it is different because it has not been implemented as a Direct Instruction site. In absolute terms, Direct Instruction, even with Grand Rapids included, outperformed the other sponsors by one-fourth to one full standard deviation on MAT Total Reading, Language, Total Math, and Spelling.

Furthermore, we can document the fact that what can be achieved through the Direct Instruction approach is only partly reflected in these tables. We can show, for example, that implementation was not fully achieved in any site and that we can do better. Our questions to you are: Wouldn't it behoove Follow Through to pursue the possibility that what we are saying is true? Wouldn't it be of potential value for educating disadvantaged kids to know what really could be done with optimal implementation? Wouldn't it be valuable to have a benchmark, a standard of excellence, that establishes what can be done and can therefore serve as a goal for other projects and schools more generally?

In your letter, you assert: "Since 1968, the program emphasis . . . has been . . ."

There are two problems with this statement. The first is that it is historically false. The second is that it places you on the horns of a serious dilemma.

1. The historical facts are these:


2. One horn of your dilemma is this:

If you deny (as you apparently have) the sponsor concept of Follow Through, and deny that what was to be measured was sponsor capability rather than merely the capacity of different sites to produce programs, you make it transparently clear that Follow Through actually conned individual sponsors. It led them to believe that their efforts would be reinforced if they produced positive results and that good approaches – those with uniform potential – would be disseminated. You admit that Follow Through is a sham and that all sponsors are equals: those achieving student performance at the 50th percentile and those at the 15th percentile.

On the other hand, if you accept the sponsorship philosophy of Follow Through and the idea that the game was, from the beginning, an attempt to find out about methods, programs, and approaches that are effective across different sites, different types of kids, and different ethnic groups, you are faced with the conclusion that you are now supporting many sponsors that have shown precisely no capacity to produce, and in fact are showing alarmingly consistent negative results.

We do not envy your position. However, we do not have to reinforce it and continue to be part of an obvious travesty. We wanted to show what could be done for the kids. After nearly ten years, we find that although we succeeded, we have been rejected. The rejection has come not merely from the outside educational establishment, for whom rejection would be a natural response, but from the agency that has funded us. This is the agency that required us to hold to a moratorium on publishing comparative data before 1975, that repeatedly suggested possible expanded funding of successful models, and that posed as something more elegant than a fancy Title I program. It seems, however, that Follow Through at the national level has become a bureaucracy with no apparent advocacy for the needs of children.

What form of significant protest do we have other than quitting Follow Through and severing our association with the kind of non-relevant educational agencies that we have tried to fight during the past years?


References

Abt Associates. Education as Experimentation: A Planned Variation Model, Vol. 3 (1976) and Vol. 4 (1977). Cambridge, Mass.: Abt Associates.

Haney, W. The Follow Through Planned Variation Experiment, Vol. 5: A Technical History of the National Follow Through Evaluation. Cambridge, Mass.: Huron Institute, 1977.

Nero and Associates. A Description of Follow Through Implementation Processes. Portland, OR: Nero and Associates, 1975.

Egbert, R. "Planned Variation in Follow Through." Unpublished manuscript presented to the Brookings Institution's Panel on Social Experimentation, Washington, D.C., April 1973.


Letter to Becker and Engelmann

[Letterhead, Dept of Health, Education, and Welfare]

DEPARTMENT OF HEALTH, EDUCATION, AND WELFARE
OFFICE OF EDUCATION
WASHINGTON, D.C. 20202

DEC 19, 1977

Dr. Wesley C. Becker
Dr. Siegfried Engelmann
Professors of Special Education
University of Oregon College of Education
Eugene, Oregon 97403

Dear Sirs:

This is in response to your letter to me of November 16, 1977, in which you stated, among voluminous other assertions, that a statement I had made in an earlier reply to you of October 28, 1977, was "historically false." As Director of the Follow Through program, I have many constructive demands upon my time that prevent my entering into what now threatens to become an endless exchange of unproductive, widely distributed correspondence between you and myself. Nevertheless, I am compelled to respond to that charge of falsehood.

Since, on October 28, I was writing a letter to you and not a position paper as you have now done, I felt it unnecessary to provide annotation of the sources upon which I based my statements. I do so now, however, in view of this charge, which you have so widely circulated.

The Follow Through Program Manual (draft), dated February 24, 1969, was prepared and used while this program was under the direction of Dr. Robert Egbert, whose later unpublished writings your paper quotes extensively. The program manual contains, on page 2, the following statement under B, "Planned Variation in a Context of Comprehensive Services":

"In February of 1968, the U.S. Office of Education invited a limited number of communities (recommended by State officials) to participate in a cooperative enterprise to develop and evaluate comprehensive Follow Through projects, each of which incorporates one of the alternative 'program approaches' as part of its comprehensive Follow Through project. Generally, each of the current program sponsors concentrates on only a portion of the total Follow Through project. The remainder of the program is developed by the local community with consultant assistance."


Based on the above quotation, I made the following statement in response to your suggestion that this office recommend your Direct Instruction Model to the Joint Dissemination Review Panel:

"This is not reasonable in that it directly conflicts with the OE's decision that projects, not 'models,' would be presented to the JDRP. This was a carefully considered decision based on the following facts."

In 1968, I was not in the employ of the U.S. Office of Education. The draft program manual to which I refer, however, contained the guidelines used by the Follow Through program and its grantees to govern their operations until June 21, 1974. On that date, under my direction, the Follow Through program published its first official regulation in the Federal Register as an interim final regulation (39 FR 22342 et seq.).

I would add that "quitting Follow Through" as a form of "significant protest" was your decision. In my opinion, it is a very destructive one for the Follow Through program and for the "poor kids" to whom you profess commitment.


Epilogue

So that was the final word. As it turned out, Wilson was replaced by Gary McDaniels, who had earlier been director of Follow Through research. He was a good guy, and he convinced us to do another round of Follow Through, although the JDRP thing was a done deal. We agreed to continue as a Follow Through sponsor. This version lacked the teeth of the earlier one, but we worked with kids in San Diego; Seattle; Bridgeport, Connecticut; Camden, New Jersey; and Moss Point, Mississippi. These sites produced more good results and more rejection by the school districts we worked with.

When it was all over, Wes and I felt pretty bitter. Wes' bitterness would lead him not only to retire from education but to refuse to talk about it – not a sentence. He and I had started writing a book on Follow Through. In fact, we had large parts of 11 chapters completed (which remained in stale-smelling folders until I started this process of trying to write the book without going crazy). Wes wouldn't talk about the book, had no interest in completing it, and would just say things like, "I don't talk about those things any more." That was the tragic final chapter for someone who had contributed more to education than John Dewey, Horace Mann, or any other recognized educational visionary.